Building a face recognition API with face_recognition, Flask and PostgreSQL


A couple of months ago I read about PimEyes, a service that finds images across the web containing a matching face. The service is somewhat controversial because it doesn’t strictly vet its users, which means the system can be abused.

This got me thinking about how such a technology was built. The face recognition libraries I had seen in the past required loading in images to conduct a comparison, and at the scale PimEyes operates on, this would be really inefficient.

To return results across many faces quickly, there would need to be some other way of carrying out the face comparison in the database layer.

After a bit of searching I found an excellent, but broken example using PostgreSQL’s cube extension

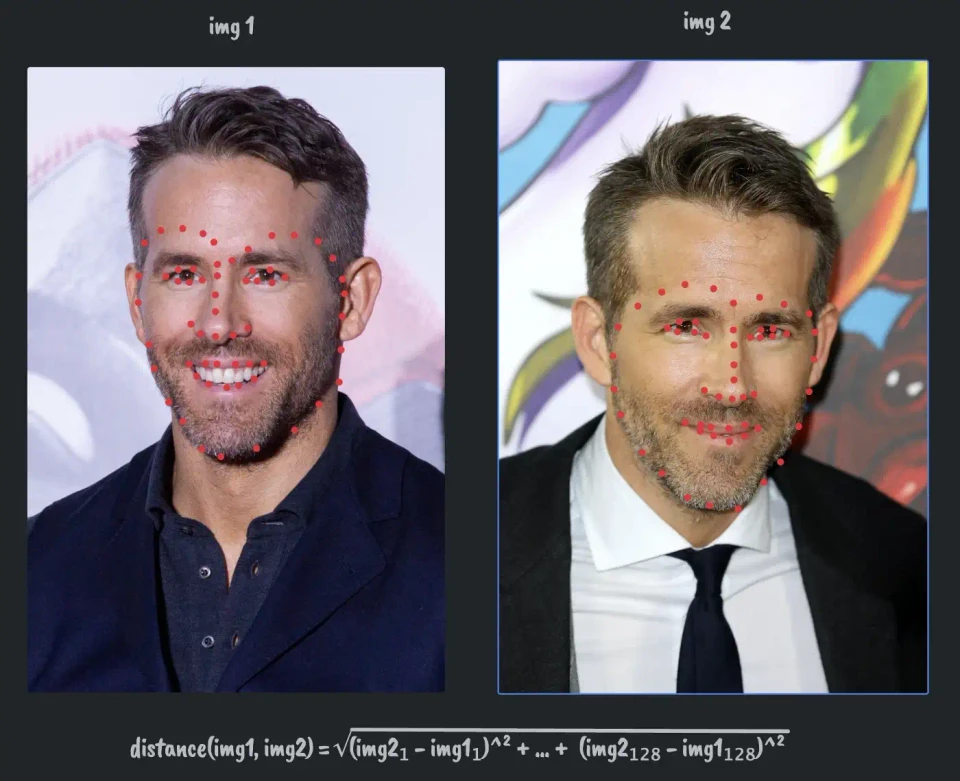

that saves two vectors of the face’s descriptors and uses euclidean distance to return records that are above a certain threshold.

After fixing the incorrect SQL statement, I was able to save one image’s descriptors and then use another image’s descriptors to return the original image as a match.

How it works

The Python code makes use of a couple of libraries:

- opencv: used to read the image to be processed
- dlib: used to load a Histogram of Oriented Gradients (HOG) face detector that finds faces in the uploaded images and returns the coordinates of a bounding rectangle for each face
- face_recognition: used to compute a face descriptor encoding for each face, a 128-dimensional vector produced by a neural network that summarises the face’s features
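As a rough illustration of how the dlib and face_recognition outputs fit together: dlib reports each detection as left, top, right and bottom edges, while `face_recognition.face_encodings` accepts `known_face_locations` as `(top, right, bottom, left)` tuples. dlib can occasionally return a rectangle that spills past the image border, so the sketch below converts between the two orders and clamps the values to the image size. `to_face_location` is a hypothetical helper, not part of either library, and it takes plain integers so it runs without dlib installed.

```python
def to_face_location(left, top, right, bottom, width, height):
    """Convert dlib-style edges to a face_recognition (top, right, bottom, left) tuple.

    Hypothetical helper: each coordinate is clamped to the image so a detection
    that spills over the border still produces a valid location.
    """
    top = max(0, min(top, height))
    bottom = max(0, min(bottom, height))
    left = max(0, min(left, width))
    right = max(0, min(right, width))
    return (top, right, bottom, left)


# With a dlib rectangle this would be called as, for example:
# to_face_location(rect.left(), rect.top(), rect.right(), rect.bottom(),
#                  image.shape[1], image.shape[0])
print(to_face_location(-5, 10, 120, 140, width=100, height=200))  # → (10, 100, 140, 0)
```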

Just a heads up: dlib can be a pain to install, especially on a Mac. The face_recognition repo has a good set of instructions for installing the library: https://github.com/ageitgey/face_recognition

The PostgreSQL database saves the 128-dimension array as two 64-dimension cubes, because the cube extension is limited to 100 dimensions out of the box (the limit is defined in the extension’s cubedata.h and can be raised by rebuilding the extension if needed).
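A minimal sketch of that split, assuming the descriptor arrives as any sequence of 128 numbers (a real encoding is a NumPy array, which `float()` also handles). The names `split_descriptor` and `cube_sql` are hypothetical; `cube_sql` renders values in the same `CUBE(array[...])` form that the API code later in this post builds with string formatting.

```python
CUBE_HALF = 64  # size of each half; cube allows at most 100 dimensions by default


def split_descriptor(descriptor):
    """Split a 128-value face descriptor into two 64-value halves."""
    values = [float(v) for v in descriptor]
    if len(values) != 2 * CUBE_HALF:
        raise ValueError("expected {} values, got {}".format(2 * CUBE_HALF, len(values)))
    return values[:CUBE_HALF], values[CUBE_HALF:]


def cube_sql(values):
    """Render a list of floats as a CUBE(array[...]) SQL expression."""
    return "CUBE(array[{}])".format(",".join(repr(v) for v in values))


# Stand-in descriptor; a real one comes from face_recognition.face_encodings
descriptor = [i / 128 for i in range(128)]
low, high = split_descriptor(descriptor)
print(len(low), len(high))  # → 64 64
print(cube_sql(low[:3]))    # → CUBE(array[0.0,0.0078125,0.015625])
```

Using `repr` keeps the full precision of each float, so the stored cube matches the encoding exactly.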

When querying for matching faces, the database computes the Euclidean distance between each of the two input 64-dimension cubes and the corresponding cubes stored in each record, then combines the two into a single distance. Records within the threshold are returned in order of increasing distance, so the face most similar to the input comes first.
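Because the squared Euclidean distance is a sum over dimensions, the squared distances of the two 64-dimension halves add up to the squared 128-dimension distance, so nothing is lost by splitting. The pure-Python sketch below mirrors what the SQL query does: compute the combined distance, keep records within the threshold and sort the closest first. The function names and the `(id, descriptor)` record shape are hypothetical; the 0.6 threshold is the value used in the search code and matches face_recognition’s default tolerance.

```python
import math


def split_distance(query, stored, half=64):
    """Euclidean distance computed the way the SQL query does it:
    one distance per 64-value half, then sqrt(d_low**2 + d_high**2)."""
    d_low = math.dist(query[:half], stored[:half])
    d_high = math.dist(query[half:], stored[half:])
    return math.sqrt(d_low ** 2 + d_high ** 2)


def find_matches(query, records, threshold=0.6):
    """In-memory equivalent of WHERE distance <= threshold ORDER BY distance ASC.

    `records` is a list of (id, descriptor) pairs (hypothetical shape)."""
    scored = sorted((split_distance(query, vec), rec_id) for rec_id, vec in records)
    return [rec_id for distance, rec_id in scored if distance <= threshold]


query = [0.0] * 128
records = [
    (1, [0.05] * 128),         # distance sqrt(128 * 0.05**2), about 0.566
    (2, [0.1] * 128),          # distance about 1.131, outside the threshold
    (3, [0.0] * 127 + [0.3]),  # distance 0.3
]
print(find_matches(query, records))  # → [3, 1]
# Splitting loses nothing: the combined distance equals the full 128-d distance
print(math.isclose(split_distance(query, records[0][1]),
                   math.dist(query, records[0][1])))  # → True
```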

Creating a basic application

The example I based my work on came with two scripts: one for adding a face to the database and another for querying the database for matching faces. These scripts worked well but were limited to local images, so to build something a little more useful I wrapped them in a Flask API, allowing users to POST images to be added and to search with.

```python
import postgresql

# py-postgresql connection string: pq://user:password@host:port/database
db = postgresql.open('pq://user:pass@localhost:5434/db')
db.execute("create extension if not exists cube;")
db.execute("drop table if exists vectors")
db.execute("create table vectors (id serial, file varchar, image_id numeric, vec_low cube, vec_high cube);")
# This is a default (B-tree) index; it is unlikely to help the
# distance-based search query used by the API
db.execute("create index vectors_vec_idx on vectors (vec_low, vec_high);")
db.close()
```

For the API to work, the database needs to be set up with the cube extension enabled and the table created.

```python
import os

import cv2
import dlib
import face_recognition
import postgresql
from flask import Flask, request, jsonify, make_response
from werkzeug.utils import secure_filename

app = Flask(__name__)
app.config['MAX_CONTENT_LENGTH'] = 10 * 1024 * 1024
app.config['UPLOAD_EXTENSIONS'] = ['.jpg', '.png', '.jpeg']
app.config['UPLOAD_PATH'] = 'uploads'
os.makedirs(app.config['UPLOAD_PATH'], exist_ok=True)

# Create the HOG face detector once rather than on every request
face_detector = dlib.get_frontal_face_detector()


def save_face_to_db(local_filename, remote_file_url, image_id):
    # Load the image; OpenCV uses BGR channel order, face_recognition expects RGB
    image = cv2.imread(local_filename)
    if image is None:
        return []
    rgb_image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

    # Run the HOG face detector on the image data (upsampling once)
    detected_faces = face_detector(rgb_image, 1)
    print("Found {} faces in the image file {}".format(len(detected_faces), local_filename))

    db = postgresql.open(os.environ.get('DATABASE_STRING'))
    face_ids = []
    try:
        # Loop through each face we found in the image
        for i, face_rect in enumerate(detected_faces):
            # Detected faces are returned as an object with the coordinates
            # of the top, left, right and bottom edges
            print("- Face #{} found at Left: {} Top: {} Right: {} Bottom: {}".format(
                i, face_rect.left(), face_rect.top(), face_rect.right(), face_rect.bottom()))
            # Pass the detected location to face_recognition in its
            # (top, right, bottom, left) order instead of re-detecting on a crop
            location = (face_rect.top(), face_rect.right(), face_rect.bottom(), face_rect.left())
            encodings = face_recognition.face_encodings(rgb_image, known_face_locations=[location])

            if len(encodings) > 0:
                # Split the 128-value descriptor into two 64-dimension cubes
                vec_low = ','.join(str(s) for s in encodings[0][0:64])
                vec_high = ','.join(str(s) for s in encodings[0][64:128])
                # The URL and image_id come from the request, so they are passed
                # as parameters ($1, $2) instead of being formatted into the SQL
                insert = db.prepare(
                    "INSERT INTO vectors (file, image_id, vec_low, vec_high) "
                    "VALUES ($1, $2::text::numeric, CUBE(array[{}]), CUBE(array[{}])) "
                    "RETURNING id".format(vec_low, vec_high))
                face_ids.append(insert.first(remote_file_url, str(image_id)))
    finally:
        db.close()
    return face_ids


@app.route("/faces", methods=['POST'])
def faces():
    uploaded_file = request.files['file']
    file_url = request.form.get('url', False)
    image_id = request.form.get('image_id', 0)
    filename = secure_filename(uploaded_file.filename)
    if filename != '' and file_url and image_id:
        # Check the extension of the uploaded file itself
        file_ext = os.path.splitext(filename)[1].lower()
        if file_ext in app.config['UPLOAD_EXTENSIONS']:
            file_path = os.path.join(app.config['UPLOAD_PATH'], filename)
            uploaded_file.save(file_path)
            try:
                face_added = save_face_to_db(file_path, file_url, image_id)
            finally:
                os.remove(file_path)
            if face_added:
                return make_response(jsonify(status='PROCESSED', ids=face_added), 201)
            else:
                return make_response(jsonify(status='FAILED'), 500)
    return make_response(jsonify(error='Invalid file or URL'), 400)


if __name__ == "__main__":
    app.run(debug=True, port=9000)
```

When adding a face, I wanted to return the ID the face was saved under in the database, so systems that use the API have a means of linking image records to the records in their own system (within this database, the link is already handled by the image_id and file columns).
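A system calling the API has to send the image as a multipart form with `file`, `url` and `image_id` fields, which is what the `/faces` route reads. The sketch below builds such a request using only the standard library. `build_add_face_request` is a hypothetical helper, and the base URL assumes the Flask development server on port 9000 as in the code above. It only constructs the request, so it can be inspected without a running server.

```python
import uuid
import urllib.request


def build_add_face_request(api_base, image_bytes, filename, file_url, image_id):
    """Build a multipart POST to the /faces route (hypothetical helper)."""
    boundary = uuid.uuid4().hex
    parts = []
    # Plain form fields, read via request.form in the Flask route
    for name, value in (("url", file_url), ("image_id", str(image_id))):
        parts.append(
            "--{}\r\nContent-Disposition: form-data; name=\"{}\"\r\n\r\n{}\r\n"
            .format(boundary, name, value).encode("utf-8")
        )
    # The image itself, read via request.files['file']
    parts.append(
        "--{}\r\nContent-Disposition: form-data; name=\"file\"; filename=\"{}\"\r\n"
        "Content-Type: application/octet-stream\r\n\r\n"
        .format(boundary, filename).encode("utf-8")
        + image_bytes
        + b"\r\n"
    )
    # Closing boundary
    parts.append("--{}--\r\n".format(boundary).encode("utf-8"))
    return urllib.request.Request(
        api_base.rstrip("/") + "/faces",
        data=b"".join(parts),
        headers={"Content-Type": "multipart/form-data; boundary=" + boundary},
        method="POST",
    )


req = build_add_face_request(
    "http://localhost:9000", b"...image bytes...", "face.jpg",
    "https://example.com/photos/face.jpg", 42,
)
print(req.get_method(), req.full_url)  # → POST http://localhost:9000/faces
```

Sending it with `urllib.request.urlopen(req)` and decoding the JSON body would give the `ids` list on success; note that `urlopen` raises `HTTPError` for the 400 and 500 responses the route can return.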

```python
import os

import cv2
import dlib
import face_recognition
import postgresql
from flask import Flask, request, jsonify, make_response
from werkzeug.utils import secure_filename

app = Flask(__name__)
app.config['MAX_CONTENT_LENGTH'] = 10 * 1024 * 1024
app.config['UPLOAD_EXTENSIONS'] = ['.jpg', '.png', '.jpeg']
app.config['UPLOAD_PATH'] = 'uploads'
os.makedirs(app.config['UPLOAD_PATH'], exist_ok=True)

# Create the HOG face detector once rather than on every request
face_detector = dlib.get_frontal_face_detector()


def process_face_matches(face_matches):
    # Convert database rows into JSON-friendly dictionaries
    results = []
    for face_match in face_matches:
        results.append({
            'id': face_match[0],
            'file': face_match[1],
            'image_id': face_match[2]
        })
    return results


def find_face_in_db(local_filename, threshold=0.6):
    # Load the image; OpenCV uses BGR channel order, face_recognition expects RGB
    image = cv2.imread(local_filename)
    if image is None:
        return []
    rgb_image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

    # Run the HOG face detector on the image data (upsampling once)
    detected_faces = face_detector(rgb_image, 1)
    print("Found {} faces in the image file {}".format(len(detected_faces), local_filename))

    db = postgresql.open(os.environ.get('DATABASE_STRING'))
    face_records = []
    try:
        # Loop through each face we found in the image
        for i, face_rect in enumerate(detected_faces):
            print("- Face #{} found at Left: {} Top: {} Right: {} Bottom: {}".format(
                i, face_rect.left(), face_rect.top(), face_rect.right(), face_rect.bottom()))
            # face_recognition expects (top, right, bottom, left)
            location = (face_rect.top(), face_rect.right(), face_rect.bottom(), face_rect.left())
            encodings = face_recognition.face_encodings(rgb_image, known_face_locations=[location])

            if len(encodings) > 0:
                vec_low = ','.join(str(s) for s in encodings[0][0:64])
                vec_high = ','.join(str(s) for s in encodings[0][64:128])
                # Combine the distances of the two 64-dimension halves into the
                # full 128-dimension Euclidean distance, keep matches within the
                # threshold and return the closest first
                distance = ("sqrt(power(CUBE(array[{}]) <-> vec_low, 2) + "
                            "power(CUBE(array[{}]) <-> vec_high, 2))").format(vec_low, vec_high)
                query = "SELECT id, file, image_id FROM vectors WHERE {0} <= {1} ORDER BY {0} ASC".format(
                    distance, threshold)
                face_record = db.query(query)
                face_records.append(process_face_matches(face_record))
    finally:
        db.close()
    return face_records


@app.route('/faces/searches', methods=['POST'])
def faces_searches():
    uploaded_file = request.files['file']
    filename = secure_filename(uploaded_file.filename)
    if filename != '':
        file_ext = os.path.splitext(filename)[1].lower()
        if file_ext in app.config['UPLOAD_EXTENSIONS']:
            file_path = os.path.join(app.config['UPLOAD_PATH'], filename)
            uploaded_file.save(file_path)
            try:
                face_matches = find_face_in_db(file_path)
            finally:
                os.remove(file_path)
            return make_response(jsonify(matches=face_matches), 200)
    return make_response(jsonify(error='Invalid file'), 400)


if __name__ == "__main__":
    app.run(debug=True, port=9000)
```

When searching for a face, the API returns the file and image_id values stored against the matching faces, so consuming systems can include them in their own responses.
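Because `find_face_in_db` returns one list of matches per face detected in the uploaded image, a consuming system will usually want to flatten the `matches` field. A minimal sketch, assuming the JSON has already been decoded into Python objects; `matched_image_ids` is a hypothetical helper and the example response is made up. Depending on the Flask version, PostgreSQL `numeric` values such as `image_id` may be serialised as strings, so the function keeps values exactly as they arrive rather than converting them.

```python
def matched_image_ids(response_json):
    """Flatten a decoded /faces/searches response into unique image_id values,
    keeping the order in which they first appear (hypothetical helper)."""
    image_ids = []
    # "matches" holds one list of matches per face detected in the uploaded image
    for face_matches in response_json.get("matches", []):
        for match in face_matches:
            if match["image_id"] not in image_ids:
                image_ids.append(match["image_id"])
    return image_ids


example = {
    "matches": [
        [{"id": 4, "file": "https://example.com/a.jpg", "image_id": 10},
         {"id": 9, "file": "https://example.com/b.jpg", "image_id": 12}],
        [{"id": 5, "file": "https://example.com/a.jpg", "image_id": 10}],
    ]
}
print(matched_image_ids(example))  # → [10, 12]
```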

Further applications

There are a few ways the face recognition process could be built into more advanced applications. The following sprang to mind as I was working on it:

- Integrating into a Django-based CMS by building an extension that uses the Python code to find faces in images uploaded by users and stores them as a separate model linking each image to the faces found in it, which could then be used in queries or to build a graph of images for exploring the relationships between the people in them

- Integrating into other CMSs in a similar manner by calling the API to add and find faces, although it might be easier to use a face recognition library written in the CMS’s own language to reduce execution time (I started looking at doing this with face-api.js and Strapi for Node.js)

- Building a specialised web crawler to create a face search for websites that can’t rely on tagged data, such as event photography websites, so that users could use a webcam capture to find images of themselves across all the photos taken

Summary

I was quite happy with what I discovered while building this basic API wrapper. I learned about a few new concepts, like PostgreSQL’s cube type and how face recognition works.

I’m currently playing around with Strapi, seeing how I could extend its functionality to create a graph of faces in different images that can be queried via GraphQL, as I think this will be an interesting application of the technology.