Things learned from being part of a failed startup

It’s my turn to write that post. The startup I was working at closed a few weeks ago and, in the midst of frantic job-hunting and working out what I want to do now, I thought I’d take some time to write up what led it to fail. I joined the company as a junior developer; after a year the CTO was removed and I became development team lead, then a few months later Software Development Manager. Most of the decisions that led to the company’s downfall happened in that first year, with their side effects rippling through the next two years.

Root Cause Analysis

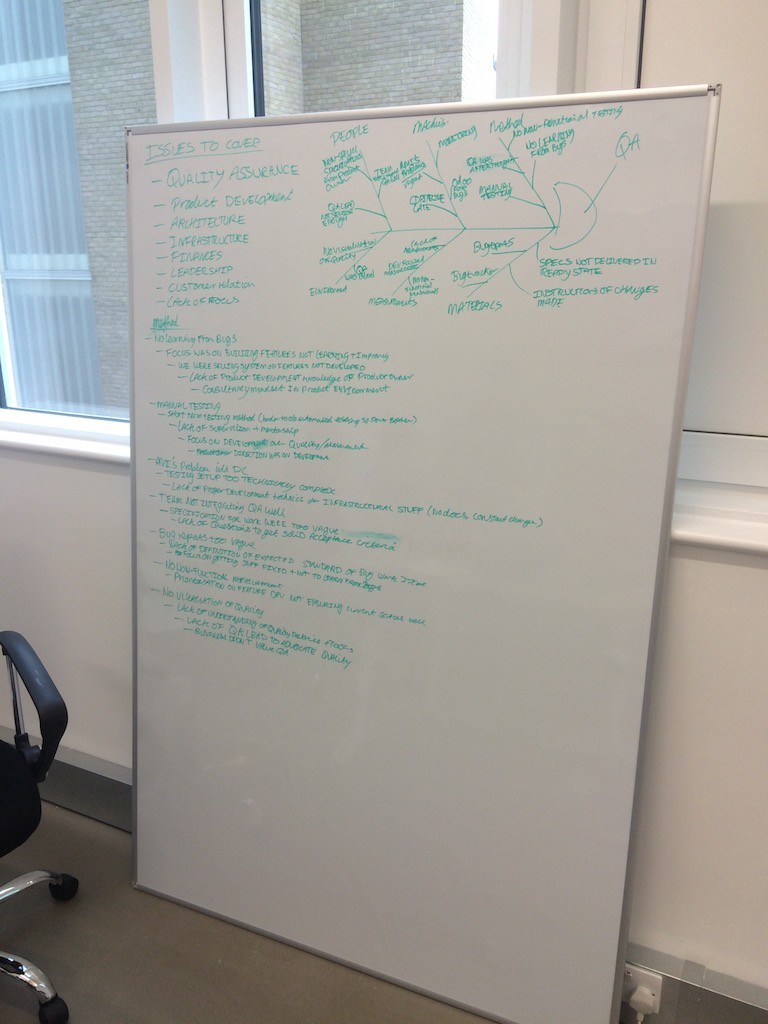

After the company closed its doors, I asked the team and the managing directors to carry out a root cause analysis of the decisions and factors that led to the situation we found ourselves in. This involved listing a few topics we wanted to discuss and, for each one, drawing up a fishbone (Ishikawa) diagram on which we listed items for discussion against each of its six stems:

- Methods: The way the team approached the topic

- Machines: The systems and processes we used to help us with the topic

- People: The roles in the team that were involved in the topic

- Materials: The input the team received around the topic

- Measurements: How the team measured their effectiveness around the topic

- Environment: The factors external to the team around the topic

For each item on a stem we then asked ourselves why it happened, recursively, up to five times. This allowed us to paint a good picture of what led to the behaviours the company and the team developed around these topics.

Here’s our fishbone and whys for quality assurance:

Unfortunately, due to time constraints, we were only really able to discuss three topics (quality, product development and software architecture), but these topics alone taught us so much about how the company worked from the start until it closed.

Here’s a write up of these topics.

Quality

Ultimately, no one owned quality. This was mostly due to a focus on feature delivery, but also because management trusted people to develop with quality in mind instead of building quality checks into the specification.

This lack of quality acceptance criteria meant we were not aware of the bad practices being carried out until far too late in the process, which in turn led to slower delivery and a lot of rework.

Sacrificed quality for feature delivery

The company’s approach was to sell a client the idea of the product and then quickly build what they wanted, instead of building a product first and then finding customers for it. This left us without a roadmap or a solid specification, and the development team ended up focused on churning out features to keep clients happy without putting the appropriate quality checks in place to ensure these features performed as intended.

No one took ownership over quality

While the company frantically dashed to get features out to customers, we never bothered to measure the quality of the work being done. In the early days quality assurance was part of the CTO’s duties, but the other senior managers did not scrutinise how this was carried out, so when the CTO failed to get the development team to take ownership of the quality of the code they were writing, no one picked up on it.

Eventually I led an effort to turn this around, but by then it was too late and we struggled immensely to get back on track. We set up SonarQube to give us visibility of the quality of the code we were writing, set up a Continuous Delivery pipeline to build quality assurance into every aspect of our work, and made sure that every specification had a set of measurable acceptance criteria so we could verify we were building quality features as we developed the product further.

The problem is that the more you focus on quality, the more you unearth the effects of poor quality assurance practices in past work. This makes those who wrote the code unhappy, as they feel bad about what they did, even though the real problem was a lack of leadership focus on quality rather than them being bad developers. Part of building a sense of ownership is not building a blame culture (self-blame or otherwise), and instead helping people take ownership of fixing the problem by giving them the tools and knowledge to solve it.

We didn’t practice iterative development as a company

The company conducted most of its work within the context of iterative development; however, this only ever really applied to the development team. The business, infrastructure and client sides of the equation all worked at different paces from the development team, so we were often out of sync with each other.

One of the biggest day-to-day issues was that infrastructure changes were not tied into the development iterations: developers would often find that servers or key tools had been moved or shut down without much notice. This created tension between the two groups, as the developers felt they could not depend on infrastructure they saw as outside their control. We eventually took ownership of most of the common tools we used, such as Continuous Integration, Source Control and Continuous Delivery, to remove this problem.

Another big issue was that we failed to negotiate the availability of subject matter experts to validate the work at key points in the development iteration. This meant we were mostly pushing our increments blindly into the hands of the users without first checking that we were building what they wanted. Without this vital feedback loop we would not find out that a user didn’t like the changes until they were in production, at which point every misaligned feature cost us a great deal of the client’s trust in our abilities.

We didn’t measure ourselves or our processes

In the early days of the company we practised Scrum in name, but ignored every Scrum ceremony apart from the daily scrum (stand-up): we didn’t have retrospectives, and we most certainly didn’t use burn-down or burn-up charts to show our progress in the sprint. To be honest, we didn’t really use any notion of a sprint at all. This meant we were unable to understand how we carried out the work or how to improve our efforts.

We eventually moved to Kanban, but without a solid analysis stage we never had any notion of how the work being done measured up against the wider development effort. We learned a little too late that cycle time alone isn’t a good metric to measure ourselves against: our lead time was terrible because we never pushed any of the work into production, and measuring only the development team’s cycle time just hid this fact. Thanks to a presentation from one of the senior managers, everyone in the company learned how Kanban helps to visualise the workflow, but we never truly worked together to remove the blockers.
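To make the cycle time versus lead time distinction concrete, here is a minimal Python sketch. The `WorkItem` record, its field names and the dates are all hypothetical, invented for illustration rather than taken from our board. Cycle time is measured from starting work to the development team marking it done; lead time runs from the request to the item reaching production, with unshipped items still accruing lead time up to today.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean
from typing import Optional


@dataclass
class WorkItem:
    """Hypothetical work item with the timestamps a Kanban board might record."""
    requested: date                # entered the backlog
    started: date                  # development began
    dev_done: date                 # development team marked it done
    in_production: Optional[date]  # None if it never shipped


def cycle_time_days(item: WorkItem) -> int:
    """Development cycle time: start of work to 'done' on the team's board."""
    return (item.dev_done - item.started).days


def lead_time_days(item: WorkItem, today: date) -> int:
    """Lead time: request to production. Unshipped items keep accruing lead time."""
    end = item.in_production or today
    return (end - item.requested).days


# Made-up items: two never reached production, one shipped months later.
items = [
    WorkItem(date(2017, 1, 2), date(2017, 2, 1), date(2017, 2, 6), None),
    WorkItem(date(2017, 1, 9), date(2017, 2, 6), date(2017, 2, 10), None),
    WorkItem(date(2017, 1, 16), date(2017, 2, 13), date(2017, 2, 17), date(2017, 5, 1)),
]
today = date(2017, 6, 1)

avg_cycle = mean(cycle_time_days(i) for i in items)
avg_lead = mean(lead_time_days(i, today) for i in items)
print(f"average cycle time: {avg_cycle:.1f} days")
print(f"average lead time:  {avg_lead:.1f} days")
```

With these made-up items the average cycle time comes out at around 4 days while the average lead time is well over 100, which is exactly the gap that watching cycle time alone hid from us.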

Finally, for the last three months of the company I managed to move the development team (note: not the company) back to Scrum. We started to measure our ability to deliver and adhered to the Scrum ceremonies, and in the last week before the company closed we had our first sprint where we accurately estimated the work and delivered on time! The team also started to take ownership of the inputs and outputs of its work and to measure these too, while I found a way to automate my measurements of the team’s belief in our ability to deliver and of how happy they were doing the work (health and happiness), using a survey as part of the retrospective.
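The survey itself isn’t described here, so as an illustration only, here is a minimal Python sketch. It assumes each team member answers two hypothetical questions on a 1 to 5 scale at every retrospective (confidence that we will deliver, and happiness doing the work) and averages the answers per sprint so trends become visible. The questions, scale, sprint names and data are all assumptions, not the survey we actually used.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical retrospective answers: (sprint, member, confidence 1-5, happiness 1-5).
responses = [
    ("sprint-1", "dev-a", 2, 3),
    ("sprint-1", "dev-b", 3, 3),
    ("sprint-1", "dev-c", 2, 4),
    ("sprint-2", "dev-a", 3, 4),
    ("sprint-2", "dev-b", 4, 4),
    ("sprint-2", "dev-c", 4, 5),
]


def summarise(rows):
    """Return {sprint: (avg confidence, avg happiness)} in the order sprints first appear."""
    by_sprint = defaultdict(list)
    for sprint, _member, confidence, happiness in rows:
        # Reject answers outside the assumed 1-5 scale.
        if not (1 <= confidence <= 5 and 1 <= happiness <= 5):
            raise ValueError(f"scores must be between 1 and 5: {confidence}, {happiness}")
        by_sprint[sprint].append((confidence, happiness))
    return {
        sprint: (
            round(mean(c for c, _ in scores), 2),
            round(mean(h for _, h in scores), 2),
        )
        for sprint, scores in by_sprint.items()
    }


for sprint, (confidence, happiness) in summarise(responses).items():
    print(f"{sprint}: confidence {confidence}, happiness {happiness}")
```

Tracking the two averages from sprint to sprint, rather than reading individual answers, is what turns a retrospective survey into a measurement; with the made-up data above both averages rise between the two sprints.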

Product Development

The initial business plan was to create a platform on which to build a series of products. This would allow the company to quickly create a portfolio of products to sell, with the platform allowing the products to integrate with each other. However, due to a lack of ownership of these products and to quality issues, the products took too long to deliver and suffered from poor quality, which in turn cost us the clients who were putting up the cash to fund product development. Without a product owner, there was often no single person in charge of making executive decisions about how to evolve the products or the platform.

There was a product but no product team behind it

Because of the way the product was developed, there was never a product-centric view of the work and no clear roadmap. Combined with the lack of a clear product owner, this meant the company struggled to build a product effectively. While we had Business Analyst, CTO/Software Development Manager and User Experience staff in the company, we never truly managed to work together as a product team.

In the early days there was apparently some notion of a product team to help steer the product’s development, but unfortunately the CTO didn’t seem to provide much input about the product’s technical capabilities, which meant its technical constraints were discovered in production. Without the business, technical and user needs being negotiated before any development took place, there was no clearly validated goal, acceptance criteria or focus for the development team to work against.

The solution to having no clear product owner was to delegate to a design committee

The company’s way of dealing with the lack of product ownership was to move this role to a Community Interest Company (CIC), made up of a group of users of the software who would hold meetings and decide as a group which features would be built into future releases of the product. The CIC never materialised, due to a lack of clients to start it and a lack of help from a national programme the product was part of, though I do wonder how well it would have worked, as essentially this leads to design by committee.

We never really validated the features we developed

As stated in the Quality section above, we never validated the features we built with the end user before shipping to production, and this lack of user input also applied to the product development process. Because we built the product on the requirements of the clients who funded the development (having been sold the notion of these features existing), we never got a full user-centric view of these features and never validated that the requirements of the one user group we ran a pilot with were in fact universal. This led to poor architectural decisions, as we only had part of the picture to work with. It also meant quite a lot of changes between customers, and implementing the product involved more change management than anticipated.

We did eventually hire someone to take care of User Experience (UX), but it was hard to integrate their work into the requirements gathering and specification method being used, as there was little chance for them to work closely with users to build a better shared understanding of their needs. This is a shame, because the company didn’t have some of the problems you hear about in the UX world, such as having to conduct user research through screen recordings over the internet: we had direct access to the people using the software but never really understood or used the opportunity we had.

Another validation technique we failed to use was post-deployment user feedback. We had a very basic monitoring platform that let us keep an eye on server performance, average response time and user load, but we never acted on any of these metrics and relied far too much on this data to give us a holistic view. One key example of this lack of validation: a year into a pilot, we discovered that users were logging out and back in again to see the results of their data entry because of slow server response times. If we had been on site validating the functional and non-functional aspects of the product, we would have caught this much, much earlier.

Architecture

We went through two iterations of the product architecture, but both started life as Rapid Application Development (RAD) prototypes that were then built upon instead of being used to learn from. The second iteration was architected by a senior developer who worked alone and never validated its scalability; this was due to a lack of technical acceptance criteria and to the company’s trust in people doing their jobs properly. The silo the senior developer created caused issues for the other developers working within the design, as the senior developer had made a few decisions that incurred performance penalties.

We made RADs the foundations of what we built instead of using them to test ideas

While I don’t necessarily think building on a RAD was the entire reason for the architectural issues we encountered, I do feel that failing to properly validate the RAD before committing to it was a mistake. In both iterations of the architecture we built only one RAD, and we failed to test its non-functional characteristics, such as how it performed under heavy load.

As mentioned in the previous topics, we only validated that the architecture met the business needs, not the technical needs. So while the second iteration gave the business all the data they needed to carry out their analytics and present it to the management users of the product (not the core users), it did not take into account the time or processing power needed to actually add data to the product, which is exactly what the core users required. As we had no tests in place to prove these users would not be impacted, we only found this out in development.

Later on, when we started to build the Continuous Delivery pipeline, we created a set of tests that tracked how long these actions took by running the test suite against production (using a duplicated database) to see how the product scaled. However, this came too late in the game to be really effective.
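The tests themselves aren’t shown here, but the idea can be sketched in Python. In this minimal sketch `add_record` is a hypothetical in-memory stand-in for the core users’ data-entry action (a real check would call the product against the duplicated database), and the 2-second budget is an assumed acceptance criterion, not a figure from the product. The action is timed repeatedly and the check passes only if the 95th percentile stays within budget.

```python
import time
from statistics import quantiles

# Assumed acceptance criterion for illustration; not a figure from the product.
BUDGET_SECONDS = 2.0


def add_record(store: list, record: dict) -> None:
    """Hypothetical stand-in for the core users' data-entry action."""
    store.append(record)


def time_action(action, runs: int = 50) -> list[float]:
    """Call `action` `runs` times and return each duration in seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        action()
        durations.append(time.perf_counter() - start)
    return durations


def p95(durations: list[float]) -> float:
    """95th percentile: quantiles(n=100) returns 99 cut points; index 94 is the 95th."""
    return quantiles(durations, n=100)[94]


def check_budget(durations: list[float], budget: float = BUDGET_SECONDS) -> bool:
    """True if the 95th percentile duration is within the budget."""
    return p95(durations) <= budget


if __name__ == "__main__":
    store: list = []
    durations = time_action(lambda: add_record(store, {"value": 42}))
    print(f"p95: {p95(durations) * 1000:.3f} ms, within budget: {check_budget(durations)}")
```

A high percentile is used rather than the average because an average can look healthy while a minority of slow saves is what users actually feel. In a pipeline, a `False` from `check_budget` would be turned into a non-zero exit code to fail the stage.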

Solution was architected by one person not a team

While the first iteration of the architecture was in production, the company hired a senior developer to architect a new solution based on the issues that were discovered from use of the first iteration. The senior developer was given the job and the seniority on the strength of having one year’s experience with the framework that met the company’s business needs, but they did not act like a senior developer towards more junior members of staff. They spent two months in a silo thinking about the solution before announcing it complete, at which point everyone moved the product over to it.

The mapping system they had created caused all kinds of problems, to the point where a member of staff ended up writing a basic API-like abstraction over it just so we could use it. Eventually we removed the mapping system altogether and broke up some of the functionality in the modules the senior developer created, as they had built a monolithic module that did everything. If we had designed the new architecture as a team, the knowledge and skills amongst us would have led to a more robust architecture that was easier to work with.

Staff and Culture

While I definitely benefited from the leniency the senior management team showed towards the people they hired, it’s also true that, without performance management, people who should have been let go were not dismissed in a timely manner. The culture of the company was something we had to change over time. At the start the emphasis was on hacking things together to get them to work, but once I started leading the team we shifted the culture towards evidence-based engineering over hacking, which also changed the way the company saw the senior developer’s responsibilities, towards more of a coaching role.

Staff were not performance managed

During my time at the company I had an appraisal after my first three months, then another after two years. The lack of a formal feedback loop on staff performance, together with the lack of proper retrospectives until I introduced Scrum, meant that unless a staff member was very self-motivated there was very little to show whether they were working in the right direction. This ties in with the company trusting staff to do their jobs, which led to some people exploiting that trust and wasting company resources and money, as there were no formal processes in place to show that people were underperforming.

As a Software Development Manager I held one-to-ones with the development team, mostly to ensure people were enjoying their work, to give feedback where people may have let their standards slide and to help people develop their skills. However, this was only for the development team, not the entire company, and there was no senior management voice to back these conversations up.

The company did start to take steps towards performance managing staff by using the Skills Framework for the Information Age (SFIA) to give people job descriptions and levels, but this was not formally introduced before the company closed, and those who were underperforming were never told so by senior management.

The culture of the company at the start was around hacking

Although, once I started to lead and manage the team, I changed the culture of (at least) the development team to one of evidence-based engineering, the initial culture was about hacking software together to show that we could do what the big players in the industry did, very quickly and for far less money. The problem with this approach, as I’m sure anyone who has bought an imitation of a luxury item will tell you, is that it does not hold up in the long run. I’m fairly sure this culture is what led to the lack of quality in the development work carried out in those early days.

The hardest part about breaking a culture is that people who have developed within it, or found it easy to work within, will struggle to adopt a new one. Luckily, one of the senior managers shared my desire for a more evidence-based culture, which made adoption easier, and the one-to-ones I held with the development team helped them see the benefits of working in a more engineering-led way. Those not directly involved in the development team, however, found it harder to adjust.

In conclusion

Here’s the TL;DR:

- It’s important to have everyone taking ownership over quality and product direction

- Don’t expect people to do everything to the same high standards you have; if you want those standards, measure their output and work with them to meet them

- If you’re going to practise iterative development, don’t focus solely on the development team; include all relevant parties in the iteration to reduce communication issues

- Validate your potential features and architectural decisions to ensure they are going to do the job correctly