Using Allure and Jest to improve test reporting


I’ve recently started working on a new React Native project and decided to set up standards to ensure a high level of quality was baked into the development process.

This means a lot of unit and integration tests, which can become hard to keep tabs on as the code base grows and test suites get larger or are split up to keep the code clean.

I’ve used Allure in the past but never really saw the value in it beyond some fancier pie charts and, if Gherkin was used, the feature files showing up in the ‘behaviour’ tab.

I’ve also had trouble getting Allure to work with Jest, as there are a number of options (jest-allure, jest-allure-reporter and allure-jest-circus, to name a few) and none of the documentation on npm really explains how to set things up with React Native.

Eventually I found much better documentation on the jest-allure GitHub page, which explained how to set up jest-allure via Jest’s setupFilesAfterEnv configuration option, and this allowed me to get the full value from Allure.

Setting up jest-allure

To use jest-allure, first install the package via npm (for example, npm install --save-dev jest-allure).

With the package installed, create a jest.config.js file (or add to an existing one) and add jest-allure/dist/setup as an item under the setupFilesAfterEnv key.

```javascript
module.exports = {
  setupFilesAfterEnv: ['jest-allure/dist/setup'],
};
```

You should now see an allure-results directory created when you run your Jest tests.
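One caveat worth flagging: as far as I can tell, jest-allure hooks into the Jasmine reporter API, while Jest 27 and later default to the jest-circus runner. If no allure-results directory appears, a configuration along these lines may help. This is a sketch that assumes the jest-jasmine2 package is installed as a dev dependency.

```javascript
// jest.config.js: a sketch for Jest 27+, assuming jest-jasmine2 is installed
module.exports = {
  // Assumption: switch to the Jasmine-based runner that jest-allure hooks into
  testRunner: 'jest-jasmine2',
  setupFilesAfterEnv: ['jest-allure/dist/setup'],
};
```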

Generating a report

Running the Jest tests only produces the data Allure uses, so you’re not out of the woods just yet: you still need to run Allure itself to see the report.

You can download the Allure CLI via the allure-commandline package on npm, which then allows you to run allure from the terminal.

The main commands you’ll want to use are:

- allure generate: generates the report so you can open it in a browser

- allure serve: generates the report and kicks off a web server for you

The CLI doesn’t watch for file changes, however, so you’ll need to run these commands every time you run your tests, unless you create a file watcher to re-run the allure generate command alongside a separate web server process (something like python -m http.server).
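A file watcher of that kind could be sketched in Node roughly as follows. The function names (buildGenerateArgs, debounce, watchResults) and the allure-report output directory are my own choices for illustration, and the sketch assumes the allure binary is on your PATH. It uses the --clean and -o options of allure generate to overwrite the previous report.

```javascript
const { spawn } = require('node:child_process');
const fs = require('node:fs');

// Build the argument list for `allure generate`, overwriting any previous report
function buildGenerateArgs(resultsDir, reportDir) {
  return ['generate', resultsDir, '--clean', '-o', reportDir];
}

// Delay calls to fn until delayMs have passed without a new call,
// so a burst of new result files triggers only one regeneration
function debounce(fn, delayMs) {
  let timer = null;
  return (...args) => {
    clearTimeout(timer);
    timer = setTimeout(() => fn(...args), delayMs);
  };
}

// Watch the results directory and regenerate the report when it changes.
// Assumes the `allure` binary is on the PATH.
function watchResults(resultsDir = 'allure-results', reportDir = 'allure-report') {
  const regenerate = debounce(() => {
    spawn('allure', buildGenerateArgs(resultsDir, reportDir), { stdio: 'inherit' });
  }, 500);
  return fs.watch(resultsDir, regenerate);
}

// To start watching, call: watchResults();
```

The debounce is there because a single Jest run writes many result files in quick succession, and regenerating once per file would be wasteful.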

Enhancing the report

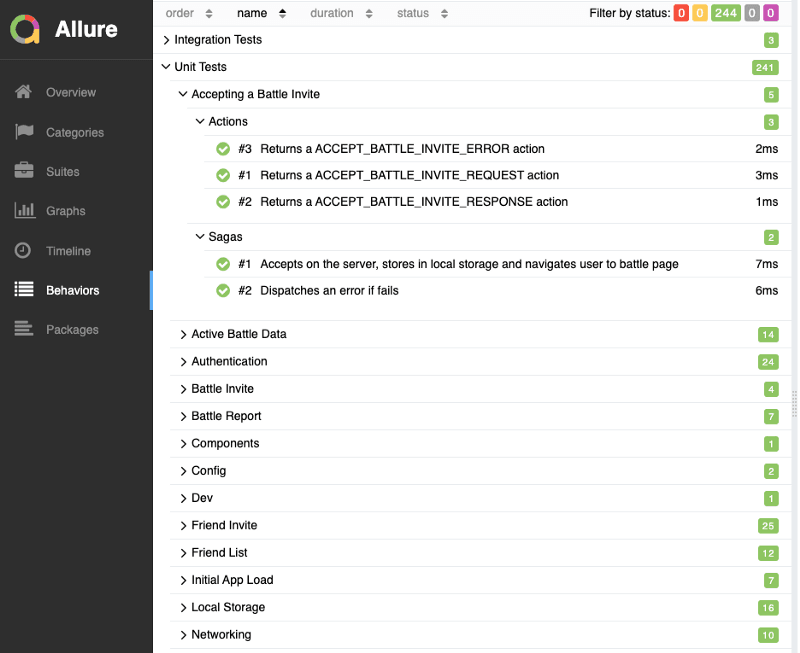

```javascript
import { all, call, put } from 'redux-saga/effects';
// Project-specific imports (the saga, its action creators, NavigationService,
// eventsToListenTo and authdPayload) are omitted here.

describe('initialAppLoadSaga', () => {
  beforeEach(() => {
    // `reporter` is the global object added by jest-allure
    reporter
      .epic('Unit Tests')
      .feature('Initial App Load')
      .story('Sagas');
  });

  it('Navigates to app if user is authenticated and does not need to register', () => {
    const generator = initialAppLoadSaga(initialAppLoad());
    expect(generator.next().value).toEqual(put(getAuthFromLocalStorage()));
    expect(generator.next().value).toEqual(put(getNeedToRegisterFromLocalStorage()));
    expect(generator.next().value).toEqual(put(localStorageActiveBattleDataRequest()));
    expect(generator.next().value).toEqual(all(eventsToListenTo));
    expect(generator.next(authdPayload).value).toEqual(call(NavigationService.navigate, 'App'));
    expect(generator.next().done).toBe(true);
  });
});
```

The basic Allure report doesn’t offer much more than a pie chart of your test results and, if you’ve used Gherkin, your features in the Behaviour tab.

The main value you’ll get from Allure will be if you annotate your tests using the Allure annotation API.

jest-allure adds a reporter object to the global variables available to the test runner, which exposes the following methods you can use:

- severity: Set the severity of the code being tested

- epic: Set the Epic associated with the test (used in the behaviours tab)

- feature: Set the Feature associated with the test (used in the behaviours tab)

- story: Set the Story associated with the test (used in the behaviours tab)

- startStep: Used to indicate a step taken when carrying out a test scenario

- endStep: Used to indicate the end of that step

- addArgument: same as startStep by the looks of it

- addEnvironment: Adds environmental information to the report via addParameter

- addAttachment: Adds attachments to the test run

- addLabel: Adds labels to the test run

- addParameter: Adds parameters passed to the code under test to the test run
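To illustrate how startStep and endStep pair up, here is a minimal sketch of a wrapper that keeps the two calls balanced. withStep is a hypothetical helper of my own, not part of jest-allure, and it assumes endStep can be called without arguments.

```javascript
// Hypothetical helper: wraps a synchronous block of test code in an Allure step.
// `reporter` is any object exposing startStep(name) and endStep().
function withStep(reporter, name, fn) {
  reporter.startStep(name);
  try {
    return fn();
  } finally {
    // Close the step even if fn throws, so the start/end calls stay balanced
    reporter.endStep();
  }
}

// Inside a test, using the global `reporter` from jest-allure:
// withStep(reporter, 'Load auth from local storage', () => {
//   expect(generator.next().value).toEqual(put(getAuthFromLocalStorage()));
// });
```

Note that this version only suits synchronous code; for an async step you would need to await the callback before calling endStep.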

Allure supports a lot more annotations, but as severity, epic, feature and story all call addLabel, you could probably add your own labels for those annotations.

You’ll find a breakdown of all the features supported by Allure on the Allure website.
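As a rough sketch of that idea, the helper below applies a set of custom labels through addLabel. applyLabels is a hypothetical name of my own, and the sketch assumes addLabel takes a name and a string value. The owner and tag label names are ones Allure itself recognises.

```javascript
// Hypothetical helper: applies custom Allure labels through any object
// exposing addLabel(name, value), such as jest-allure's global `reporter`.
function applyLabels(reporter, labels) {
  for (const [name, value] of Object.entries(labels)) {
    // Convert values to strings, since label values are treated as text here
    reporter.addLabel(name, String(value));
  }
  return reporter;
}

// Inside a test file:
// beforeEach(() => applyLabels(reporter, { owner: 'mobile-team', tag: 'sagas' }));
```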

Using the report

Once the annotations are in place and you’ve generated the report, the last step is to share it with the team.

There are integrations for various CI tools, such as Jenkins, that aggregate the allure-results files with those generated by subsequent test runs, but you don’t need them if you have some means of uploading those files to a file server.

Once you have these files you can then generate the report by means of a standalone Allure service or just by downloading the files and running allure generate locally.

I would suggest, however, that if you take this approach it’s worthwhile using the Allure annotations to add metadata to the report, such as:

- The Environment the tests ran in (CI, Local etc)

- The Run ID (if running in CI so you can trace it back to a particular run)

- If you’re running system tests then it’s a good idea to document the servers being run against

- If you’re running the tests on all branches then it’s worth adding which branch so you can filter only on a particular branch

- If you use an issue tracker or user stories it would be worthwhile adding links to these resources so you have easy access to additional information when interpreting results
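Some of the metadata above could be attached with addEnvironment, roughly as sketched below. The environment variable names (CI, CI_RUN_ID, BRANCH_NAME, TARGET_SERVER) and both helper functions are hypothetical, so swap in whatever your CI system actually exposes. The sketch also assumes addEnvironment takes a name and a value.

```javascript
// Collect run metadata from environment variables.
// CI, CI_RUN_ID, BRANCH_NAME and TARGET_SERVER are hypothetical names.
function collectRunMetadata(env) {
  const metadata = {
    Environment: env.CI ? 'CI' : 'Local',
    'Run ID': env.CI_RUN_ID,
    Branch: env.BRANCH_NAME,
    Server: env.TARGET_SERVER,
  };
  // Drop entries whose variables are not set
  return Object.fromEntries(
    Object.entries(metadata).filter(([, value]) => value !== undefined && value !== '')
  );
}

// Attach each entry to the current test, assuming reporter.addEnvironment(name, value)
function annotateRun(reporter, env) {
  for (const [name, value] of Object.entries(collectRunMetadata(env))) {
    reporter.addEnvironment(name, value);
  }
}

// In a test file (jest-allure provides `reporter` as a global):
// beforeEach(() => annotateRun(reporter, process.env));
```

Keeping the collection step separate from the reporter call makes it easy to check which values will end up in the report without running Allure at all.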

While all this reporting functionality is great, the most important thing to think about is what value it’s going to provide the team.

It’s worth talking to the team members to see if there are any needs they have from reporting (such as spotting flaky tests or reporting on regressions) and ensuring these are met before adding all the fancy functionality that only you want to add.

You could also use the APIs of issue trackers to add Allure report information to their pages, with links to the report (with some filtering). Users can then click through to test information from within those entities, giving you a basic form of bi-directional traceability.